Abstract

Four species of grass generate half of all human-consumed calories. However, abundant biological data on species that produce our food remain largely inaccessible, imposing direct barriers to understanding crop yield and fitness traits. Here, we assemble and analyse a continent-wide database of field experiments spanning 10 years and hundreds of thousands of machine-phenotyped populations of ten major crop species. Training an ensemble of machine learning models, using thousands of variables capturing weather, ground sensor, soil, chemical and fertilizer dosage, management and satellite data, produces robust cross-continent yield models exceeding R2 = 0.8 prediction accuracy. In contrast to ‘black box’ analytics, detailed interrogation of these models reveals drivers of crop behaviour and complex interactions predicting yield and agronomic traits. These results demonstrate the capacity of machine learning models to interrogate large datasets, generate new and testable outputs and predict crop behaviour, highlighting the powerful role of data in the future of food.

Access options

Subscribe to Journal

Get full journal access for 1 year

119,01 €

only 9,92 € per issue

Tax calculation will be finalised during checkout.

Rent or Buy article

Get time limited or full article access on ReadCube.

from$8.99

All prices are NET prices.

Data availability

All data are available from the Supplementary Information, the linked database descriptor publication5 uploaded to Scientific Data and the figshare7 repository, after screening under our own extensive imputations and quality controls and freely available for research or non-commercial purposes under a CC-BY-NC 3.0 license. Some data available in this repository5 are, alternately, available as a dataset on the Grains Research and Development Corporation website published under a CC-BY-NC 3.0 AU license. The dataset is based predominantly on data sourced from GRDC and GRDC’s extensive investment in the collection, development and presentation of that dataset is acknowledged; however, the dataset has not been subject to GRDC’s quality control processes, does not include updates and corrections that have been made to the dataset and as such may be unreliable such that results of research based on the dataset should not be relied on for any purpose; and any person wishing to conduct research using the original NVT data must approach GRDC directly with a research proposal, noting that terms and conditions may apply. Alternately, the extensively quality-controlled and imputed figshare repository remains freely accessible for research under a creative commons license7.

References

- 1.

United Nations Department of Economic and Social Affairs. World Population Prospects: 2015 Revision (United Nations, 2016).

- 2.

Burgueño, J., de los Campos, G., Weigel, K. & Crossa, J. Genomic prediction of breeding values when modeling genotype × environment interaction using pedigree and dense molecular markers. Crop Sci. 52, 707–719 (2012).

- 3.

Cabrera-Bosquet, L., Crossa, J., von Zitzewitz, J., Serret, M. D. & Luis Araus, J. High-throughput phenotyping and genomic selection: the frontiers of crop breeding converge. J. Integr. Plant Biol. 54, 312–320 (2012).

- 4.

Zamir, D. Where have all the crop phenotypes gone? PLoS Biol. 11, e1001595 (2013).

- 5.

Newman, S. J. & Furbank, R. T. A multiple species, continent-wide, million-phenotype agronomic plant dataset. Sci. Data 8, 116 (2021).

- 6.

NVT Protocols v1.1. 75 (Grains Research and Development Corporation) https://nvt.grdc.com.au/trials/nvt-protocols (2020).

- 7.

Newman, S. J. & Furbank, R. T. Continent-wide Agronomic Experiment Data (figshare, 2021); https://doi.org/10.6084/m9.figshare.c.5296369

- 8.

Justice, C. O. et al. Land and cryosphere products from Suomi NPP VIIRS: overview and status. J. Geophys. Res. Atmos. 118, 9753–9765 (2013).

- 9.

Cohen, W. B. & Justice, C. O. Validating MODIS terrestrial ecology products: linking in situ and satellite measurements. Remote Sens. Environ. 70, 1–3 (1999).

- 10.

Wan, Z., Zhang, Y., Zhang, Q. & Li, Z.-L. Quality assessment and validation of the MODIS global land surface temperature. Int. J. Remote Sens. 25, 261–274 (2004).

- 11.

Huete, A. et al. Overview of the radiometric and biophysical performance of the MODIS vegetation indices. Remote Sens. Environ. 83, 195–213 (2002).

- 12.

Schroeder, W., Oliva, P., Giglio, L. & Csiszar, I. A. The New VIIRS 375m active fire detection data product: algorithm description and initial assessment. Remote Sens. Environ. 143, 85–96 (2014).

- 13.

Deng, H. Interpreting tree ensembles with inTrees. Int. J. Data Sci. Anal. 7, 277–287 (2019).

- 14.

Breiman, L. & Cutler, A. randomForest: Breiman and Cutler’s random forests for classification and regression. R package version 4.6-14 https://cran.r-project.org/web/packages/randomForest/randomForest.pdf (2012).

- 15.

Breiman, L. Random forests. Mach. Learn. 45, 5–32 (2001).

- 16.

Friedman, J. H. Greedy function approximation: a gradient boosting machine. Ann. Stat. 29, 1189–1232 (2001).

- 17.

Breiman, L., Friedman, J. H., Olshen, R. A. & Stone, C. J. Classification and Regression Trees (Chapman and Hall, 1984).

- 18.

Cortes, C. & Vapnik, V. Support-vector networks. Mach. Learn. 20, 273–297 (1995).

- 19.

Steinwart, I. & Thomann, P. liquidSVM. R package version 1.2.4 https://cran.r-project.org/package=liquidSVM (2017).

- 20.

Mevik, B. H. & Wehrens, R. The pls package: principal component and partial least squares regression in R. J. Stat. Softw. https://doi.org/10.18637/jss.v018.i02 (2007).

- 21.

Schymanski, S. J., Or, D. & Zwieniecki, M. Stomatal control and leaf thermal and hydraulic capacitances under rapid environmental fluctuations. PLoS ONE 8, e54231 (2013).

- 22.

Vialet-Chabrand, S. & Lawson, T. Dynamic leaf energy balance: deriving stomatal conductance from thermal imaging in a dynamic environment. J. Exp. Bot. 70, 2839–2855 (2019).

- 23.

Gonzalez-Dugo, M. P. et al. A comparison of operational remote sensing-based models for estimating crop evapotranspiration. Agric. Meteorol. 149, 1843–1853 (2009).

- 24.

Food Balances (2014-) (FAO, 2016); http://faostat3.fao.org/download/FB/FBS/E

- 25.

Sánchez-Azofeifa, A. et al. Estimation of the distribution of Tabebuia guayacan (Bignoniaceae) using high-resolution remote sensing imagery. Sensors 11, 3831–3851 (2011).

- 26.

Furbank, R. T., Sirault, X. R. R. & Stone, E. in Sustaining Global Food Security: The Nexus of Science and Policy (ed. Zeigler R. S.) 203–223 (CSIRO Publishing, 2019).

- 27.

Alcorn, M. A. et al. in Proc. IEEE Computer Society Conference on Computer Vision and Pattern Recognition 4840–4849 (IEEE, 2019). https://doi.org/10.1109/CVPR.2019.00498

- 28.

Holloway, E. The unlearnable checkerboard pattern. Commun. Blyth Inst. 1, 78–80 (2019).

- 29.

Mohri, M. & Medina, A. M. in Algorithmic Learning Theory (eds Bshouty, N.H. et al.)124–138 (Springer Verlag, 2012).

- 30.

Lehmann, J., Coumou, D. & Frieler, K. Increased record-breaking precipitation events under global warming. Clim. Change 132, 501–515 (2015).

- 31.

Westra, S. & Sisson, S. A. Detection of non-stationarity in precipitation extremes using a max-stable process model. J. Hydrol. 406, 119–128 (2011).

- 32.

Vaze, J. et al. Climate non-stationarity—validity of calibrated rainfall-runoff models for use in climate change studies. J. Hydrol. 394, 447–457 (2010).

- 33.

Verdon-Kidd, D. C. & Kiem, A. S. Quantifying drought risk in a nonstationary climate. J. Hydrometeorol. 11, 1019–1031 (2010).

- 34.

Milly, P. C. D. et al. Climate change: stationarity is dead: whither water management? Science 319, 573–574 (2008).

- 35.

Rosenzweig, C. et al. in Climate Change 2007: Impacts, Adaptation and Vulnerability (eds Parry, M. L. et al.) 79–131 (Cambridge Univ. Press, 2007).

- 36.

Sun, F., Roderick, M. L. & Farquhar, G. D. Rainfall statistics, stationarity, and climate change. Proc. Natl Acad. Sci. USA 115, 2305–2310 (2018).

- 37.

Lenaerts, B., Collard, B. C. Y. & Demont, M. Improving global food security through accelerated plant breeding. Plant Sci. 287, 110207 (2019).

- 38.

McCarl, B., Villavicencio, X. & Wu, X. Climate change and future analysis: is stationarity dying? Am. J. Agric. Econ. 90, 1241–1247 (2008).

- 39.

Towell, G. G. & Shavlik, J. W. Knowledge-based artificial neural networks. Artif. Intell. 1, 119–165 (1994).

- 40.

Bae, J. K. & Kim, J. Combining models from neural networks and inductive learning algorithms. Expert Syst. Appl. 38, 4839–4850 (2011).

- 41.

Jamshidian, M. & Jalal, S. Tests of homoscedasticity, normality, and missing completely at random for incomplete multivariate data. Psychometrika 75, 649–674 (2010).

- 42.

Dong, Y. & Peng, C.-Y. J. Principled missing data methods for researchers. Springerplus 2, 222 (2013).

- 43.

R Core Team. R: A Language and Environment for Statistical Computing (R Foundation for Statistical Computing, 2013).

- 44.

Wright, M. N. & Ziegler, A. Ranger: a fast implementation of random forests for high dimensional data in C++ and R. J. Stat. Softw. 77, 1–17 (2017).

Acknowledgements

We acknowledge funding from the Australian Research Council Centre of Excellence for Translational Photosynthesis (CE140100015). We wish to acknowledge the hard work of the many researchers and agronomists who collected the historical agronomic data for the Grains Research and Development Corporation National Variety Trials and formerly made these data freely available under a CC-BY-NC license. Without their previous generosity, data reprocessed and used in this work would remain unavailable and invisible, to both scientists and the farmers who fund the NVTs.

Author information

Affiliations

Contributions

S.J.N. conceived and designed the study, wrote the code, performed the analysis, designed and plotted the figures and cowrote the manuscript. R.F. cowrote the manuscript and contributed to the experiment design.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Peer review information Nature Plants thanks Manuel Marcaida and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

Extended Data Fig. 1 Environmental and vegetation patterns captured by remote sensing.

Remote-sensing arrays capture seasonal patterns across a season, from sowing (red lines) through anthesis or flowering (blue lines), to harvest (orange lines), in diverse variables such as photosynthesis and leaf area (top row), vegetation indices and land surface temperatures (second row), cloud cover and raw reflectance values (third to fifth rows), infrared bands, latent heat flux, and fire events (fifth row). Variation shown for a single site (Turretfield 2010; Main Season planting), abbreviations as in Supplementary Table 2.

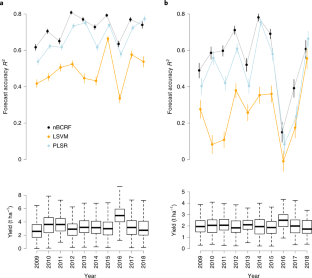

Extended Data Fig. 2 Learning curves for models constructed using progressive seasonal data.

Models constructed to predict yield from a given point in the season relative to sowing (x axis), in a wheat and b canola, trained on 2008–2017 data and used to predict 2018 season data. As the season progresses, later-season data is included and models improve in forecast accuracy (y axes) with xvBCRF models (black) outperforming PLSR models (blue) and LSVMs (orange) over almost all forecast horizons.

Extended Data Fig. 3 Prediction accuracy of BCRF models in diverse phenotypes under random holdout trial prediction.

Using complete season data from 90 days before to 270 days after sowing to train BCRF models, tested in 100 random holdout sites, allows the prediction of key agronomic traits such as: a grain protein content, b time to 50% flowering, and c Glucosinolate oil content (Canola only). However, BCRF models trained under the same criteria were of limited or no use for predicting grain size or volume metrics such as: d Hectolitre weight, e the fraction of grain below 2.0 mm and f thousand-grain weight. Numbers N indicate sample sizes across predicted holdout sites.

Extended Data Fig. 4 Prediction accuracy of annual forecast BCRF models trained using species-specific data.

Prediction accuracy of models trained using data to 200 DAS from within species, rather than cross-species models, provide good accuracy for projecting yield in a Canola (N = 608), b Chickpeas (N = 243), c Faba Beans (N = 188), d Lupins (N = 403), e Oats (N = 216), f Field Peas (N = 230), g Lentils (N = 331), h Triticale (N = 266), and i Wheat (N = 2296). Test data are for the latest observed year containing successful trials in each species.

Extended Data Fig. 5 Model complexity and accuracy excluding individual domains of input data.

Predictive accuracy within models, such as the a RPRM and b extreme gradient boosting models shown, was reduced by different rates under leave-one-out testing. Across all levels of model complexity (x axis), removal of satellite data (blue) produced the greatest reduction in accuracy relative to models trained using all available data (black circles). Exclusion of weather-station data (orange), metadata (pink), and management data (green) imparted similar and more limited costs in accuracy. Crossvalidation error rates (y axis, a) is the inverse of R2 values and RMSE (y axis, b) is the root mean squared error for random holdout observations.

Extended Data Fig. 6 Model complexity and accuracy using single domains of input data.

Predictive accuracy within models, such as the a RPRM and b extreme gradient boosting models shown, was driven by inclusion of certain domains. Relative to models trained using all available data (black circles), satellite data alone (blue) produced models of greater accuracy than weather-station data alone (orange), metadata alone (pink) and management data alone (green) across all levels of model complexity (x axis). Crossvalidation error rates (y axis, a) is the inverse of R2 values, RMSE (y axis, b) is the root mean squared error for random holdout observations.

Extended Data Fig. 7 Pruned decision tree for wheat yield.

Pruned decision tree predicting yield variation (green colour scale, t/Ha shown in boxes) from all input data to 200 DAS, illustrating the readable heuristics generated by tree models. Model accuracy under annual forecast prediction is R2 = 0.79; RMSE = 1.13. Days after Sowing (DAS), Time of Sowing (TOS), Days before sowing (DBS), Middle Infrared (MIR), Enhanced Vegetation Index (EVI), Normalized Differenced Vegetation Index (NDVI), Leaf Area Index (LAI), MODIS Reflection Band (RB), all values rounded to 2 significant digits.

Extended Data Fig. 8 Pruned decision tree for wheat yield trained on data to time of sowing.

A pruned decision tree for wheat yield, constructed using data available at the time of sowing, illustrating the readable output of decision tree models (annual forecast accuracy R2 = 0.3). Cumulative sum (CS), Monoammonium Phosphate (MAP), MODIS middle infrared reflectance band (MIR).

Extended Data Fig. 9 Flowering-window minimum temperatures and yield.

Average wheat yields (y axis) observed for a given variety-site combination exhibit a counterintuitive relationship with frost severity during flowering (here defined as 110–140 Days after Sowing, x axis): more severe frosts predicting higher yields (blue curve; locally weighted smoothed spline; N = 54,658). This may reflect a survival bias resulting from the absence of reporting zero-yielding trials, where only high-yielding crops survive extreme low temperatures.

Extended Data Fig. 10 Distribution of model accuracy from imputation error and noise.

There were substantial differences in the sensitivity of model predictions to imputation noise under both random-sample imputation (orange) and random forest imputation (blue) under forward-projection accuracy (N = 25 prediction models per boxplot; data presented as median values with boxes for IQR and whiskers for 95% CIs). Models with the lowest sensitivity to imputation-based variability were stratified sampling BCRFs and linear support vector machines (LSVMs).

Supplementary information

Supplementary Information

Supplementary Figs. 1–10 and Tables 1–7.

Supplementary Tables

Word file of Tables 1–7 (non-display items).

Supplementary Software

Supplementary Code 1.

Supplementary Software

Supplementary Code 2.

Rights and permissions

About this article

Cite this article

Newman, S.J., Furbank, R.T. Explainable machine learning models of major crop traits from satellite-monitored continent-wide field trial data. Nat. Plants (2021). https://ift.tt/2YnCQfe

-

Received:

-

Accepted:

-

Published:

"machine" - Google News

October 04, 2021 at 10:17PM

https://ift.tt/3DetlO3

Explainable machine learning models of major crop traits from satellite-monitored continent-wide field trial data - Nature.com

"machine" - Google News

https://ift.tt/2VUJ7uS

https://ift.tt/2SvsFPt

Bagikan Berita Ini

0 Response to "Explainable machine learning models of major crop traits from satellite-monitored continent-wide field trial data - Nature.com"

Post a Comment