Abstract

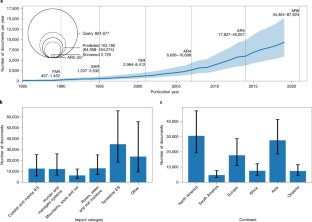

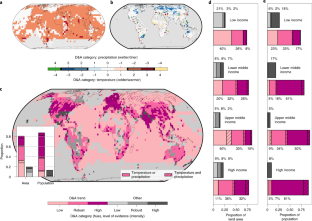

Increasing evidence suggests that climate change impacts are already being observed around the world. Global environmental assessments face challenges in appraising the growing literature. Here we use the language model BERT to identify and classify studies on observed climate impacts, producing a comprehensive machine-learning-assisted evidence map. We estimate that 102,160 (64,958–164,274) publications document a broad range of observed impacts. By combining our spatially resolved database with grid-cell-level human-attributable changes in temperature and precipitation, we infer that attributable anthropogenic impacts may be occurring across 80% of the world’s land area, where 85% of the population reside. Our results reveal a substantial ‘attribution gap’, as robust levels of evidence for potentially attributable impacts are twice as prevalent in high-income as in low-income countries. While gaps remain in confidently attributing climate impacts at the regional and sectoral level, this database illustrates the potential current impact of anthropogenic climate change across the globe.

Data availability

The results of this study are made available in a public repository (ref. 58).

Code availability

The code used to produce these results is made available in a public repository (ref. 50).

References

1. Cramer, W. et al. in Climate Change 2014: Impacts, Adaptation, and Vulnerability (eds Field, C. B. et al.) 979–1037 (Cambridge Univ. Press, 2014).

2. IPCC Climate Change 2014: Impacts, Adaptation, and Vulnerability (eds Field, C. B. et al.) (Cambridge Univ. Press, 2014).

3. Hansen, G. The evolution of the evidence base for observed impacts of climate change. Curr. Opin. Environ. Sustain. 14, 187–197 (2015).

4. Haunschild, R., Bornmann, L. & Marx, W. Climate change research in view of bibliometrics. PLoS ONE 11, e0160393 (2016).

5. Grieneisen, M. L. & Zhang, M. The current status of climate change research. Nat. Clim. Change 1, 72–73 (2011).

6. Haddaway, N. R. & Pullin, A. S. The policy role of systematic reviews: past, present and future. Springer Sci. Rev. 2, 179–183 (2014).

7. Callaghan, M. W., Minx, J. C. & Forster, P. M. A topography of climate change research. Nat. Clim. Change 10, 118–123 (2020).

8. Porciello, J., Ivanina, M., Islam, M., Einarson, S. & Hirsh, H. Accelerating evidence-informed decision-making for the Sustainable Development Goals using machine learning. Nat. Mach. Intell. 2, 559–565 (2020).

9. Nunez-Mir, G. C., Iannone, B. V. III, Curtis, K. & Fei, S. Evaluating the evolution of forest restoration research in a changing world: a “big literature” review. New For. 46, 669–682 (2015).

10. Westgate, M. J. et al. Software support for environmental evidence synthesis. Nat. Ecol. Evol. 2, 588–590 (2018).

11. Lamb, W. F., Creutzig, F., Callaghan, M. W. & Minx, J. C. Learning about urban climate solutions from case studies. Nat. Clim. Change 9, 279–287 (2019).

12. Cohen, A. M. An effective general purpose approach for automated biomedical document classification. AMIA Annu. Symp. Proc. 2006, 161–165 (2006).

13. Marshall, I. J., Kuiper, J., Banner, E. & Wallace, B. C. Automating biomedical evidence synthesis: RobotReviewer. In Proc. Association for Computational Linguistics Meeting 7–12 (The Association for Computational Linguistics, 2017).

14. Baclic, O. et al. Challenges and opportunities for public health made possible by advances in natural language processing. Can. Commun. Dis. Rep. 46, 161–168 (2020).

15. Schleussner, C.-F. & Fyson, C. L. Scenarios science needed in UNFCCC periodic review. Nat. Clim. Change 10, 272 (2020).

16. Fankhauser, S. Adaptation to climate change. Annu. Rev. Resour. Econ. 9, 209–230 (2017).

17. Bedsworth, L. W. & Hanak, E. Adaptation to climate change. J. Am. Plann. Assoc. 76, 477–495 (2010).

18. IPCC Special Report on Managing the Risks of Extreme Events and Disasters to Advance Climate Change Adaptation (Cambridge Univ. Press, 2012).

19. Hallegatte, S. & Mach, K. J. Make climate-change assessments more relevant. Nature 534, 613–615 (2016).

20. Conway, D. et al. The need for bottom-up assessments of climate risks and adaptation in climate-sensitive regions. Nat. Clim. Change 9, 503–511 (2019).

21. Hansen, G. & Stone, D. Assessing the observed impact of anthropogenic climate change. Nat. Clim. Change 6, 532–537 (2016).

22. Knutson, T. R., Zeng, F. & Wittenberg, A. T. Multimodel assessment of regional surface temperature trends: CMIP3 and CMIP5 twentieth-century simulations. J. Clim. 26, 8709–8743 (2013).

23. Knutson, T. R. & Zeng, F. Model assessment of observed precipitation trends over land regions: detectable human influences and possible low bias in model trends. J. Clim. 31, 4617–4637 (2018).

24. Nerem, R. S. et al. Climate-change-driven accelerated sea-level rise detected in the altimeter era. Proc. Natl Acad. Sci. USA 115, 2022–2025 (2018).

25. Gudmundsson, L., Leonard, M., Do, H. X., Westra, S. & Seneviratne, S. I. Observed trends in global indicators of mean and extreme streamflow. Geophys. Res. Lett. 46, 756–766 (2019).

26. Padrón, R. S. et al. Observed changes in dry-season water availability attributed to human-induced climate change. Nat. Geosci. 13, 477–481 (2020).

27. Devlin, J., Chang, M.-W., Lee, K. & Toutanova, K. BERT: pre-training of deep bidirectional transformers for language understanding. Preprint at https://arxiv.org/abs/1810.04805 (2019).

28. Sanh, V., Debut, L., Chaumond, J. & Wolf, T. DistilBERT, a distilled version of BERT: smaller, faster, cheaper and lighter. Preprint at https://arxiv.org/abs/1910.01108 (2020).

29. Halterman, A. Mordecai: full text geoparsing and event geocoding. J. Open Source Softw. 2, 91 (2017).

30. Lane, J. E., Kruuk, L. E. B., Charmantier, A., Murie, J. O. & Dobson, F. S. Delayed phenology and reduced fitness associated with climate change in a wild hibernator. Nature 489, 554–557 (2012).

31. Zhang, Y. Q., Yu, C. H. & Bao, J. Z. Acute effect of daily mean temperature on ischemic heart disease mortality: a multivariable meta-analysis from 12 counties across Hubei Province, China. Zhonghua Yu Fang Yi Xue Za Zhi 50, 990–995 (2016).

32. Barry, A. A. et al. West Africa climate extremes and climate change indices. Int. J. Climatol. 38, e921–e938 (2018).

33. Hegerl, G. C. et al. Good practice guidance paper on detection and attribution related to anthropogenic climate change. In Meeting Report of the Intergovernmental Panel on Climate Change Expert Meeting on Detection and Attribution of Anthropogenic Climate Change (eds Stocker, T. F. et al.) (IPCC, 2010).

34. Rosenzweig, C. et al. in Climate Change 2007: Impacts, Adaptation and Vulnerability (eds Parry, M. L. et al.) 79–131 (Cambridge Univ. Press, 2007).

35. Rosenzweig, C. et al. Attributing physical and biological impacts to anthropogenic climate change. Nature 453, 353–357 (2008).

36. Gridded Population of the World, Version 4 (GPWv4): Population Density Revision 11 (CIESIN, 2018).

37. Frank, D. et al. Effects of climate extremes on the terrestrial carbon cycle: concepts, processes and potential future impacts. Glob. Change Biol. 21, 2861–2880 (2015).

38. Schleussner, C.-F. et al. 1.5 °C hotspots: climate hazards, vulnerabilities, and impacts. Annu. Rev. Environ. Resour. 43, 135–163 (2018).

39. Peng, R. D. Reproducible research in computational science. Science 334, 1226–1227 (2011).

40. Müller-Hansen, F., Callaghan, M. W. & Minx, J. C. Text as big data: develop codes of practice for rigorous computational text analysis in energy social science. Energy Res. Soc. Sci. 70, 101691 (2020).

41. Shepherd, T. G. Storyline approach to the construction of regional climate change information. Proc. R. Soc. A 475, 20190013 (2019).

42. Rosenzweig, C. & Neofotis, P. Detection and attribution of anthropogenic climate change impacts. Wiley Interdiscip. Rev. Clim. Change 4, 121–150 (2013).

43. Mengel, M., Treu, S., Lange, S. & Frieler, K. ATTRICI 1.1—counterfactual climate for impact attribution. Geosci. Model Dev. https://doi.org/10.5194/gmd-14-5269-2021 (2021).

44. Gudmundsson, L. et al. Globally observed trends in mean and extreme river flow attributed to climate change. Science 371, 1159–1162 (2021).

45. Diffenbaugh, N. S. Verification of extreme event attribution: using out-of-sample observations to assess changes in probabilities of unprecedented events. Sci. Adv. 6, eaay2368 (2020).

46. Herring, S. C., Christidis, N., Hoell, A., Hoerling, M. P. & Stott, P. A. Explaining Extreme Events of 2019 from a Climate Perspective (American Meteorological Society, 2021).

47. Cochrane Handbook for Systematic Reviews of Interventions (John Wiley & Sons, 2019).

48. Callaghan, M., Müller-Hansen, F., Hilaire, J. & Lee, Y. T. NACSOS: NLP assisted classification, synthesis and online screening. Zenodo https://doi.org/10.5281/zenodo.4121526 (2020).

49. McHugh, M. L. Interrater reliability: the kappa statistic. Biochem. Med. 22, 276–282 (2012).

50. Callaghan, M. Machine learning-based evidence and attribution mapping of 100,000 climate impact studies - code. Zenodo https://doi.org/10.5281/ZENODO.5327409 (2021).

51. Chang, C.-C. & Lin, C.-J. LIBSVM: a library for support vector machines. ACM Trans. Intell. Syst. Technol. 2, 27 (2011).

52. Bender, E. M., Gebru, T., McMillan-Major, A. & Shmitchell, S. On the dangers of stochastic parrots: can language models be too big? In Proc. ACM Conference on Fairness, Accountability, and Transparency 610–623 (Association for Computing Machinery, 2021); https://doi.org/10.1145/3442188.3445922

53. Bergstra, J. & Bengio, Y. Random search for hyper-parameter optimization. J. Mach. Learn. Res. 13, 281–305 (2012).

54. Gururangan, S. et al. Don’t stop pretraining: adapt language models to domains and tasks. Preprint at https://arxiv.org/abs/2004.10964 (2020).

55. Morice, C. P., Kennedy, J. J., Rayner, N. A. & Jones, P. D. Quantifying uncertainties in global and regional temperature change using an ensemble of observational estimates: the HadCRUT4 data set. J. Geophys. Res. Atmos. https://doi.org/10.1029/2011JD017187 (2012).

56. Eyring, V. et al. Overview of the Coupled Model Intercomparison Project Phase 6 (CMIP6) experimental design and organization. Geosci. Model Dev. 9, 1937–1958 (2016).

57. Beusch, L., Gudmundsson, L. & Seneviratne, S. I. Crossbreeding CMIP6 Earth system models with an emulator for regionally optimized land temperature projections. Geophys. Res. Lett. 47, e2019GL086812 (2020).

58. Callaghan, M. et al. Machine learning-based evidence and attribution mapping of 100,000 climate impact studies - data. Zenodo https://doi.org/10.5281/ZENODO.5257271 (2021).

Acknowledgements

M.C. is supported by a PhD stipend from the Heinrich Böll Stiftung. J.C.M. acknowledges funding from the ERC-2020-SyG GENIE (grant ID 951542). S.N. and Q.L. acknowledge funding from the German Federal Ministry of Education and Research (BMBF) and the German Aerospace Center (DLR) via the LAMACLIMA project as part of AXIS, an ERANET initiated by JPI Climate (http://www.jpi-climate.eu/AXIS/Activities/LAMACLIMA, last access: 26 August 2021, grant no. 01LS1905A), with co-funding from the European Union (grant no. 776608). M.R. acknowledges support by the ERC-SyG USMILE (grant ID 85518). R.J.B. acknowledges support from the EU Horizon2020 Marie-Curie Fellowship Program H2020-MSCA-IF-2018 (proposal no. 838667 -INTERACTION). We thank F. Zeng for providing preliminary temperature and precipitation trend assessment results for our project. We acknowledge the World Climate Research Programme, which, through its Working Group on Coupled Modelling, coordinated and promoted CMIP6. We thank the climate modelling groups for producing and making available their model output, the Earth System Grid Federation (ESGF) for archiving the data and providing access and the multiple funding agencies who support CMIP6 and ESGF.

Author information

Contributions

M.C., J.C.M. and C.-F.S. designed the research. M.C. developed the coding platform and machine-learning pipeline to identify studies, with advice from M.R. M.C., C.-F.S., G.H., Q.L. and E.T. developed the codebook and coordinated screening and coding. M.C., Q.L., S.N. and C.-F.S. conceptualized the link to detection and attribution data. S.N. performed the univariate detection and attribution analysis of temperature and precipitation trends and the assessment of internal variability, in consultation with T.R.K., who designed the methodology for these calculations. M.C. and S.N. designed and implemented the matching of studies with detection and attribution data. M.C., C.-F.S., S.N., Q.L., G.H., E.T., M.A., R.J.B., M.H., C.J., K.L., A.L., N.v.M., I.M., P.P. and B.Y. contributed to screening and coding studies. M.C., C.-F.S., J.C.M., Q.L. and S.N. wrote the manuscript with contributions from all authors.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Peer review information: Nature Climate Change thanks Abeed Sarker and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

Extended Data Fig. 1 A visual representation of the workflow of our machine-learning-assisted attribution map.

Squares represent documents (not to scale); boxes represent the steps taken. Documents are screened by hand, and the resulting labels are used to generate predictions that machine-label the remaining documents. These machine-labelled documents are matched by location with information from observations and climate models on the detection and attribution of trends in temperature and precipitation.

Extended Data Fig. 2 Nested cross validation (CV) procedure for the binary relevance classifier.

Models are fit using training documents and evaluated on validation/test documents. The inner CV loop is used to search for optimal hyperparameter settings, which are then evaluated on the outer test sets.
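The nested procedure described in this caption can be illustrated with a minimal, standard-library sketch. It is not the authors' pipeline: the toy one-dimensional k-nearest-neighbour classifier, the fold counts, the hyperparameter grid and all function names (`k_folds`, `knn_accuracy`, `nested_cv`) are assumptions chosen only to show how the inner loop selects a setting that is then scored on an untouched outer test fold.

```python
import random
from statistics import mean

def k_folds(n_items, k, seed=0):
    """Shuffle indices 0..n_items-1 and deal them into k near-equal folds."""
    idx = list(range(n_items))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]

def knn_accuracy(train, test, k):
    """Toy stand-in model (assumption): 1-D k-nearest-neighbour majority vote."""
    correct = 0
    for x, y in test:
        neighbours = sorted(train, key=lambda item: abs(item[0] - x))[:k]
        votes = sum(label for _, label in neighbours)
        pred = 1 if 2 * votes > len(neighbours) else 0
        correct += pred == y
    return correct / len(test)

def nested_cv(data, outer_k=5, inner_k=3, grid=(1, 3, 5), seed=0):
    """Return (chosen hyperparameter, outer test score) for each outer fold."""
    results = []
    outer = k_folds(len(data), outer_k, seed)
    for i, test_idx in enumerate(outer):
        test = [data[j] for j in test_idx]
        train = [data[j] for f, fold in enumerate(outer) if f != i for j in fold]
        inner = k_folds(len(train), inner_k, seed + 1)

        def inner_score(k):
            # Mean validation accuracy of setting k across the inner folds.
            scores = []
            for v, val_idx in enumerate(inner):
                val = [train[j] for j in val_idx]
                fit = [train[j] for f, fold in enumerate(inner) if f != v for j in fold]
                scores.append(knn_accuracy(fit, val, k))
            return mean(scores)

        best_k = max(grid, key=inner_score)  # inner loop: hyperparameter search
        results.append((best_k, knn_accuracy(train, test, best_k)))  # outer loop: evaluation
    return results

# Synthetic, perfectly separable toy data: (feature, label) pairs.
data = [(i / 40, int(i >= 20)) for i in range(40)]
for best_k, score in nested_cv(data):
    print(f"chosen k={best_k}, outer test accuracy={score:.2f}")
```

Because the outer test fold never takes part in choosing the hyperparameter, the outer scores are not biased by the search itself, which is the reason for nesting the two loops.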

Extended Data Fig. 3 Performance metrics for the binary inclusion/exclusion classifier.

Each pair of dots represents the scores for a distinct cross-validation fold. Horizontal lines show the mean score across folds.

Extended Data Fig. 4 Receiver operating characteristic area under the curve (ROC AUC) scores and F1 scores for the classification of impact categories.

Each pair of dots represents the scores for a distinct cross-validation fold. Horizontal lines show the mean score across folds.

Extended Data Fig. 5 Receiver operating characteristic area under the curve (ROC AUC) scores and F1 scores for the classification of drivers.

Each pair of dots represents the scores for a distinct cross-validation fold. Horizontal lines show the mean score across folds.
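For readers unfamiliar with the two metrics reported in Extended Data Figs. 3–5, the following standard-library sketch shows how each can be computed for a single fold. The function names, the 0.5 decision threshold and the four-document example are illustrative assumptions, not values or code from the study.

```python
def roc_auc(labels, scores):
    """ROC AUC as the share of (positive, negative) pairs ranked correctly; ties count half."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def f1_score(labels, scores, threshold=0.5):
    """F1 = 2TP / (2TP + FP + FN) after thresholding the predicted scores."""
    preds = [int(s >= threshold) for s in scores]
    tp = sum(p == 1 and y == 1 for p, y in zip(preds, labels))
    fp = sum(p == 1 and y == 0 for p, y in zip(preds, labels))
    fn = sum(p == 0 and y == 1 for p, y in zip(preds, labels))
    return 2 * tp / (2 * tp + fp + fn) if tp else 0.0

# Hypothetical fold: two irrelevant and two relevant documents.
labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
print(roc_auc(labels, scores))   # 0.75
print(f1_score(labels, scores))  # 2/3, about 0.667
```

ROC AUC depends only on how the documents are ranked, whereas F1 depends on the chosen threshold, so the two scores can diverge for the same classifier.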

Extended Data Fig. 6 Geographical distribution of surface trends.

Temperature trends from 1951 to 2018 (left) and precipitation trends from 1951 to 2016 (right) in (a),(b) observations and (c),(d) CMIP6 10-model ensemble mean all-forcing runs. Bottom panels (e),(f) show observations categorised into attribution categories, following refs. 8,7, respectively. Categories -/+2 indicate observed cooling/warming or drying/wetting trends that, after accounting for internal climate variability, are inconsistent with the simulated response to natural forcings but consistent with the simulated response to both natural and anthropogenic forcings. This is the clearest case of changes that are at least partially attributable to anthropogenic forcing, according to the CMIP6 ensemble. Categories -/+1 have detectable observed changes but are not assessed as attributable to anthropogenic forcing, because the observed changes are significantly smaller than those simulated in the average all-forcing runs. Categories -/+3 have detectable changes and are assessed as at least partly attributable to anthropogenic forcing, although the observed changes are inconsistent with the all-forcing runs: they are in the same direction as, but significantly stronger than, the mean of the all-forcing runs. Categories -/+4 represent cooling/warming or drying/wetting trends that are inconsistent with the simulated response to natural forcings but whose sign is opposite to that of the average simulated all-forcing response; category 0 represents trends that are not distinguishable from natural variability alone. Categories -/+4 and 0 are considered to be examples of non-detectable trends.
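The category scheme in this caption can be read as a small decision rule. The sketch below is one hedged interpretation only: the function name, the representation of "consistent with" as falling inside a simulated trend range, and the example numbers are all assumptions, and the actual statistical tests behind the categories are not reproduced here.

```python
def attribution_category(obs, nat_range, all_range, all_mean):
    """Assign a signed category (0, ±1..±4) to one grid cell's observed trend.

    obs       -- observed trend (positive: warming/wetting, negative: cooling/drying)
    nat_range -- (low, high) trend range simulated under natural forcings only,
                 including internal variability (assumed input)
    all_range -- (low, high) trend range simulated under all forcings (assumed input)
    all_mean  -- mean trend of the all-forcing runs
    """
    nat_low, nat_high = nat_range
    all_low, all_high = all_range
    if nat_low <= obs <= nat_high:
        return 0                      # indistinguishable from natural variability
    sign = 1 if obs > 0 else -1
    if obs * all_mean < 0:
        return 4 * sign               # opposite in sign to the all-forcing response
    if all_low <= obs <= all_high:
        return 2 * sign               # consistent with the all-forcing runs
    weaker = abs(obs) < abs(all_mean)
    return (1 if weaker else 3) * sign  # weaker (1) or stronger (3) than simulated

# Hypothetical trends in arbitrary units per decade.
natural = (-0.1, 0.1)
forced = (0.5, 1.0)
for trend in (0.05, 0.3, 0.8, 1.4, -0.4):
    print(trend, attribution_category(trend, natural, forced, all_mean=0.75))
```

In this reading, only categories ±2 and ±3 would be treated as at least partly attributable to anthropogenic forcing, matching the wording of the caption.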

Extended Data Fig. 7 Fractional difference between average CMIP6 modeled low-frequency standard deviation of annual mean precipitation vs observed precipitation.

To estimate the internal low-frequency variability, the observed time series were detrended and low-pass filtered with a 7-year running mean before computing the standard deviations. For the models, we used the full available control runs (filtered with a 7-year running mean) to estimate the internal low-frequency variability of each model. The top panel shows the multi-model ensemble standard deviation comparison, while the ten individual panels below it show the comparison for each individual CMIP6 model used in the study. The fractional difference was computed as: [(Model st. dev. - Observed st. dev.) / (Observed st. dev.)].
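The calculation described in this caption can be sketched with the standard library. The linear least-squares detrending, the use of the sample standard deviation, the function names and the synthetic series are assumptions made for illustration; only the 7-year running mean and the fractional-difference formula come from the caption.

```python
import math
from statistics import mean, stdev

def detrend(series):
    """Remove a least-squares linear trend (assumed detrending method)."""
    n = len(series)
    t_mean = (n - 1) / 2
    y_mean = mean(series)
    slope = (sum((t - t_mean) * (y - y_mean) for t, y in enumerate(series))
             / sum((t - t_mean) ** 2 for t in range(n)))
    return [y - (y_mean + slope * (t - t_mean)) for t, y in enumerate(series)]

def running_mean(series, window=7):
    """Low-pass filter: mean over each full window of consecutive years."""
    return [mean(series[i:i + window]) for i in range(len(series) - window + 1)]

def low_frequency_sd(series, window=7, remove_trend=True):
    """Standard deviation of the (optionally detrended) low-pass-filtered series."""
    if remove_trend:
        series = detrend(series)
    return stdev(running_mean(series, window))

def fractional_difference(model_sd, observed_sd):
    """(Model st. dev. - Observed st. dev.) / (Observed st. dev.)."""
    return (model_sd - observed_sd) / observed_sd

# Synthetic example: an observed series with a trend and a control run without one.
observed = [0.02 * t + math.sin(2 * math.pi * t / 20) for t in range(66)]
control = [1.5 * math.sin(2 * math.pi * t / 20) for t in range(200)]
obs_sd = low_frequency_sd(observed)
model_sd = low_frequency_sd(control, remove_trend=False)  # control run used as is
print(fractional_difference(model_sd, obs_sd))
```

A positive value means the model has more low-frequency variability than the observations at that grid cell, and a negative value means it has less.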

Extended Data Fig. 8 Difference between average CMIP6 modeled low-frequency standard deviation (°C) of annual mean surface air temperature vs observed surface temperature.

To estimate the internal low-frequency variability, the observed time series were detrended and low-pass filtered with a 7-year running mean before computing the standard deviations. For the models, we used the full available control runs (filtered with a 7-year running mean) to estimate the internal low-frequency variability of each model. The top panel shows the multi-model ensemble standard deviation comparison, while the ten individual panels below it show the comparison for each individual CMIP6 model used in the study.

Extended Data Fig. 9 An illustration of the spatial resolution and weighting methodology.

a. Detection and attribution categories for temperature in East Africa; b. the number of grid cells of each type in Sudan; c. weighted studies for each grid cell in Sudan; d. the number of studies referring to each extracted geographical location in Sudan.
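One way to picture the weighting shown in panel c is the sketch below. It assumes, as a simplification that should be checked against the published methods, that each study mentioning a location is spread evenly over the grid cells that location covers, so that a country-level mention adds less to any single cell than a city-level mention. The function name, location names and grid coordinates are hypothetical.

```python
from collections import defaultdict

def weighted_studies_per_cell(study_locations, location_cells):
    """Spread each study-location mention evenly over the cells of that location.

    study_locations -- {study id: [location names mentioned]}
    location_cells  -- {location name: [grid cells covered by that location]}
    """
    weights = defaultdict(float)
    for locations in study_locations.values():
        for location in locations:
            cells = location_cells[location]
            for cell in cells:
                weights[cell] += 1 / len(cells)  # assumed inverse-cell-count weight
    return dict(weights)

# Hypothetical 2 x 2 grid: a country covering four cells and a city inside one of them.
location_cells = {
    "country": [(0, 0), (0, 1), (1, 0), (1, 1)],
    "city": [(0, 0)],
}
study_locations = {
    "study_1": ["country"],
    "study_2": ["city"],
    "study_3": ["country", "city"],
}
print(weighted_studies_per_cell(study_locations, location_cells))
```

Under this assumption the weights across all cells sum to the total number of location mentions, so large regions do not inflate the apparent evidence density.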

Supplementary information

Supplementary Information

Query and Supplementary Fig. 1.

Supplementary Data

A list of the categories used to code relevant documents. Each category could be used as an impact or a driver. To make the classification problem tractable, the categories were merged into ‘broad categories’, resembling those used in IPCC AR5, and ‘aggregated categories’, which distinguish between different types of impacts to a greater extent.

About this article

Cite this article

Callaghan, M., Schleussner, CF., Nath, S. et al. Machine-learning-based evidence and attribution mapping of 100,000 climate impact studies. Nat. Clim. Chang. (2021). https://ift.tt/3BsB9vg
