Most original equipment manufacturers automate their industrial machinery using proven PLC or dedicated controller technologies for a good reason: They provide the ultimate in reliability. And while the capabilities and ease of use of these traditional platforms have marched forward, they still lack many of the features delivered by common consumer-grade technologies.

Today, software developers for the consumer market take advantage of open source code to enable connectivity, databases, visualization and more. Established developers in the industrial operational technology (OT) arena also are finding applications where they would like to use some of these same open source characteristics. Until recently, industrial platforms have not been conducive to allowing these components to snap in so easily.

Machine automation end-users now will find there are options for adding open source projects to enable new features for dedicated industrial control platforms, empowering them to create advanced functionality combined with traditional dependability. This also opens the door for implementers to engage a new generation of developers, designers and engineers already familiar with open source. In short, open source can help OEMs and end users develop high performance solutions.

Why Open Source?

In recent years, a growing contingent of consumers has discovered that microcontrollers like the Raspberry Pi and Arduino can be used to create all sorts of automation and information applications. These users are sometimes known as the “maker” community, and their undertakings are called “homebrew” projects. Some consider this the foundation of the Internet of Things (IoT).

Microcontrollers can be a good development platform in many cases. Implementers are able to mix and match many types of programming languages and constructs to fit the need. Furthermore, much of the coding environment is built upon and draws from open source projects.

For instance, instead of creating a basic operating system or purchasing a proprietary version, Raspberry Pi users benefit from a proven distribution of Linux, developed and improved over decades. Communications are based on standard wired Ethernet and Wi-Fi, using secure firewall-friendly protocols. Users can create code in C++, Python or many other languages, and if they require a database they can select from various options. All these elements are free and supported by large development communities.

While microcontroller platforms like Raspberry Pi are very capable, they are typically not robust and reliable enough for critical industrial applications. However, they do provide a basis for how successful open sourcing can contribute to industrial applications.

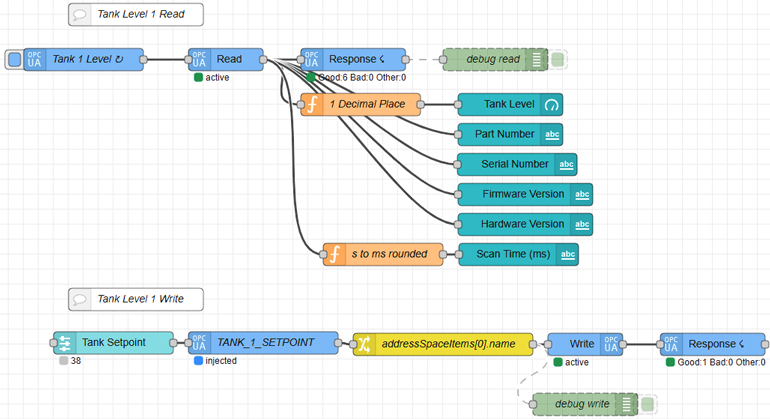

Open source elements like the Node-RED visual programming tool can be very useful for communicating industrial automation data. (Emerson)

Industrial Benefits of Open Source

To gain some insight into how open source can improve industrial automation, it can be helpful to reflect on how and why early hardwired automation progressed to PLCs.

Prior to the rise of commercially viable PLCs in the early 1980s, automation was largely performed with pneumatic components and electrical devices like relays. Large control schemes, such as for machine or elevator logic, required enormous hardwired relay and timer cabinets. These were complicated to design, expensive to build and troublesome to maintain.

PLCs effectively virtualized physical relays into software and made most of the real-time control functions library-based. Instead of reinventing fundamentals each time as specific tangible instances, users could select from standard commands and easily replicate their work. While PLC platforms themselves were proprietary, they enabled open platform concepts of library objects and reusability.

More than Homebrew

Building on these ideas, today’s industrial users are incorporating IT-capable open source technology with their OT-based machine automation systems. To a great extent, this is driven by the desire to incorporate Industrial IoT (IIoT) communication with machine automation, which had typically been mostly standalone.

Open source offers a flexible way to communicate machine data out to remote and cloud-based systems. Other good use cases include adding advanced operational and analytical computing ability right at a machine. Open source can also enable lightweight dashboard visualization of operational information and key performance indicators (KPIs).

These open source elements are a good fit for industrial machinery applications:

- Ubuntu Linux: A general-purpose operating system for hosting other software

- Cockpit: Lightweight remote Linux server manager

- Docker: Engine for virtualizing and carefully managing application “containers”

- Portainer: Toolset for managing Docker environments

- Node-RED: Graphical programming tool for configuring event-driven data flows

- InfluxDB: Time-series database

- Grafana: Analytics and visualization software (dashboards, trends, charts, graphs)

- OPC UA: Common industrial communications protocol specification

- MQTT: Lightweight publish/subscribe messaging protocol
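To make the data-handling role of these elements more concrete, here is a minimal sketch of how a single machine reading might be formatted as InfluxDB line protocol before being written to the time-series database. The measurement name, tag, field names and timestamp are hypothetical, and the helper functions are illustrative rather than part of any vendor's stack. The sketch uses only the Python standard library and does not cover every escaping rule.

```python
def _escape(value, chars):
    """Backslash-escape each character in `chars` that appears in `value`."""
    text = str(value)
    for ch in chars:
        text = text.replace(ch, "\\" + ch)
    return text


def _format_field(value):
    """Render a field value using line-protocol type conventions."""
    if isinstance(value, bool):  # check bool first: bool is a subclass of int
        return "true" if value else "false"
    if isinstance(value, int):
        return f"{value}i"  # integers carry an 'i' suffix
    if isinstance(value, float):
        return repr(value)
    # Anything else is written as a double-quoted string field.
    escaped = str(value).replace("\\", "\\\\").replace('"', '\\"')
    return f'"{escaped}"'


def to_line_protocol(measurement, tags, fields, timestamp_ns):
    """Build one line: measurement,tag=value field=value timestamp."""
    if not fields:
        raise ValueError("line protocol requires at least one field")
    head = _escape(measurement, ", ")
    for key, value in sorted(tags.items()):
        head += f",{_escape(key, ',= ')}={_escape(value, ',= ')}"
    body = ",".join(
        f"{_escape(key, ',= ')}={_format_field(value)}"
        for key, value in sorted(fields.items())
    )
    return f"{head} {body} {timestamp_ns}"


# Hypothetical reading from a machine named "press 1".
print(to_line_protocol(
    "air_flow",
    {"machine": "press 1"},
    {"lpm": 12.5, "cycles": 3},
    1593561600000000000,
))
```

In a stack like the one listed above, a flow tool such as Node-RED would typically handle this formatting step, so the code is mainly useful for seeing what the stored record looks like.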

Choosing traditional industrial development environments can be viewed as akin to ordering a takeout dinner from a limited menu. Open source, on the other hand, is a little like opening your stocked refrigerator and selecting the ingredients so you can cook up exactly what you want. Takeout is certainly workable, but making one’s own meal can be cheaper, healthier, tastier and more satisfying.

So the question becomes, how does a typical OT controls engineer incorporate these open source concepts, often associated with the IT group, in a simple and reliable way?

Containerized open source applications are easier for users to manage and deploy, even on edge-located hardware, compared with multiple operating systems on traditional PCs. (Emerson)

Marrying IT with OT

Some enterprising users bridged the IT/OT gap by adding consumer microcontrollers to classic PLC automation. This can work well as a proof of concept but may not deliver the desired reliability for mass deployment.

A better option is emerging, as some suppliers have curated software applications and developed automation platforms directly supporting the use of open source software on industrial-grade systems.

For example, Emerson has assembled an IIoT application enablement platform called PACEdge. Industrial end-users benefit from this type of software stack because the most useful and relevant apps are gathered under a single installation.

Under this model, many of the applications run in containers. Just as virtualization allows many independent and self-sufficient operating systems to run on one computing platform, containers enable many individual applications to operate under one operating system.

Containerization makes it easier for users to manage multiple applications and allows each application to run securely, but with regulated options for interacting with other applications. Each application carries all of its necessary dependencies so it can operate in a standalone manner, providing good flexibility.

The operating systems, container environment and applications are arranged so they can be accessed and managed through graphical user interfaces instead of just command lines. This makes it easier for OT personnel to work with this more IT-oriented software.

Support is simplified by offering this open source package with version control, standard configurations, and a factory reset option. To be clear, the configuration is not locked, so the tinkering community can supplement and update the software at will, evolving it over time. However, there is always a clean way to fall back to a known-good configuration.

These features alleviate one of the greatest concerns with implementing open source compared to closed ecosystems: scaling. Semi-custom deployments are useful for single, specific installations but are often seen as an administrative problem when they must be scaled up and deployed widely. This pain is relieved by standardized configurations and platforms.

The Emerson RX3i CPL410 edge controller operates as both a PLC and as an edge-located general-purpose computer, ideally positioned to run open IIoT enablement applications. (Emerson)

Running on the Edge

A standard IIoT software stack can be deployed to many target platforms. It can run on a small Linux microcontroller to act as a gateway, or on a full consumer-grade or industrial PC to deliver much higher computing, connectivity and storage performance.

A more compelling target platform for machine automation is an industrial edge controller, which uses hardware virtualization to provide a general-purpose Linux computing environment alongside a deterministic PLC-like system, with secure and high-speed communications between the two assured via OPC UA.

Edge controller hardware is built to withstand the rigors of machine and manufacturing service. The open source IIoT elements are positioned for direct low-latency access to data sourced by the deterministic control side and can communicate this data to supervisory IT-based systems. In addition, the open source computing can inform the deterministic side about user-selected settings and optimized operational parameters.

Open Source in Action

One manufacturer supplies intelligent industrial pneumatic systems, able to interact with supervisory PLC automation over networked connections.

To help users achieve the best value from these smart components, the manufacturer developed an IIoT software stack solution hosted in this case by an industrial PC instead of an edge controller, so as to access the extended data available from components.

This value-add IIoT offering runs in parallel with the end-user’s automation and pneumatic components. It provides a plug-in way to identify air leaks, indicate operational status and deliver predictive maintenance for items like cylinder seals.
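As a rough illustration of the kind of analytic such a stack could host, the sketch below flags a possible air leak when recent air consumption drifts above a known-good baseline. This is not the manufacturer's method. The function name, the 15% tolerance, the five-reading window and the sample values are all assumptions chosen for the example.

```python
from statistics import mean


def flag_possible_leak(readings, baseline, tolerance=0.15, window=5):
    """Return True when recent consumption runs well above the baseline.

    readings  -- air consumption per machine cycle, oldest first (hypothetical units)
    baseline  -- consumption per cycle recorded when the system was known to be healthy
    tolerance -- assumed fractional margin above the baseline before flagging
    window    -- number of most recent readings to average
    """
    if len(readings) < window:
        return False  # not enough data to judge yet
    recent = mean(readings[-window:])
    return recent > baseline * (1 + tolerance)


# Hypothetical data: consumption creeps upward over the last few cycles.
healthy = [10.0, 10.1, 9.9, 10.0, 10.0]
drifting = [10.0, 11.8, 12.1, 12.0, 12.2, 12.4]
print(flag_possible_leak(healthy, baseline=10.0))
print(flag_possible_leak(drifting, baseline=10.0))
```

Averaging over a window rather than reacting to a single reading is a simple way to avoid flagging one-off spikes. A production system would likely need something more robust.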

Open IIoT applications can make it easy for users to develop edge-enabled analytical and visualization solutions, such as this example tracking air consumption. (Emerson)

Open Machine Automation Drivers

Machine OEMs and end-users alike are facing challenges when adopting the latest technologies in efficient ways while preserving reliability. In addition, the incoming workforce is more familiar with modern open software systems and may be resistant to legacy platforms.

These concerns can be alleviated by open source computing options, delivered via an IIoT software stack and hosted by edge controllers or industrial computing devices.

Because the stack is offered as a standard package, end-users can take advantage of common templates to build applications and shorten development time. This helps end-users and OEMs develop high-performance machine automation OT solutions incorporating the latest IT advancements.

Kyle Hable is a product manager responsible for integrating and advancing IIoT and edge technology across Emerson’s machine automation solutions product portfolio. He has spent his entire career connecting data and embedded things to Ethernet networks.

Every year, during Amazon’s annual planning process, leaders in every business unit are asked a pointed question: How do you plan to leverage machine learning in your business?

The words “we don’t plan to” are not an acceptable answer, said Swami Sivasubramanian, vice president of Amazon AI at Amazon Web Services.

Speaking from a virtual stage at the Collision from Home conference, Sivasubramanian told the audience that the world has already entered the golden age of artificial intelligence and machine learning.

The online conference, held June 23-25, was orchestrated by the producers of the world’s largest tech conference, Web Summit, and drew more than 30,000 attendees. It was arguably the ideal platform for a cloud-based, live meet-up to discuss long-term trends in the digital world and for Sivasubramanian’s talk, “No hype: Deploying real-world machine learning.”

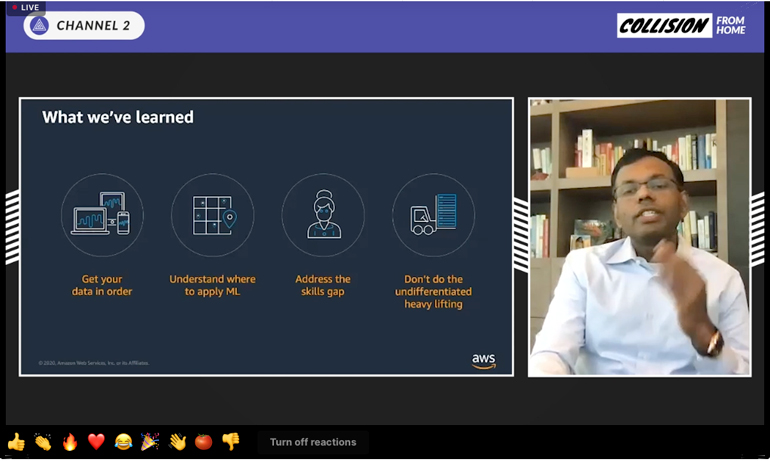

Swami Sivasubramanian, vice president of Amazon AI at Amazon Web Services, counts down four pitfalls associated with machine learning. (Machine Design)

ML has seen traction across industries and supply chains, and there is no shortage of examples of CIOs and CEOs professing how AI and ML are transforming business for the better, said Sivasubramanian. His list of illustrations included the financial sector, where Intuit uses machine learning to forecast its contact center volume and staff accordingly; the medical field, where Aidoc leverages AI and computer vision to build systems that assist radiologists with image scans; and the public sector, where agencies such as NASA use ML algorithms to explore extreme conditions associated with superstorms. (NASA partners with AWS to detect solar flares based on signal anomalies that occur in space.)

Amazon’s own journey goes back more than 20 years, when the digital conglomerate (today worth $1.3 trillion) started using machine learning technology for its supply chain, fulfillment centers and last-mile delivery. Amazon’s Echo voice-controlled smart speaker device is just a recent example of a product with development roots inside Amazon Lab126 dating back 10 years.

Back then, Jeff Bezos and his leadership team began to realize that machine learning was about to go through a pivotal moment. “With the advent of new technologies, such as deep learning on the horizon, they started realizing that every line of business is going to need to have a machine learning strategy,” said Sivasubramanian.

Swami Sivasubramanian, vice president of Amazon AI at Amazon Web Services. (Amazon)

Since then, explained Sivasubramanian, every line of business at Amazon, whether it runs technology, research, human resources, finance or supply chain, is asked to consider how it can enhance the customer experience using machine learning in a meaningful way. Along the way, Amazon learned a few lessons about applying ML successfully, which Sivasubramanian frames as four pitfalls to avoid.

- Get data in order.

- Understand where to apply machine learning.

- Address the skills gap.

- Don’t do the undifferentiated heavy lifting.

Four Machine Learning Pitfalls

At Amazon, Sivasubramanian’s professional repertoire extends to bootstrapping the NoSQL database ecosystem, as well as the AWS ML stack: deep learning frameworks and ML algorithms; ML platform services; and AI application services such as Lex (rich conversational experiences), Polly (text-to-speech service) and Rekognition (image processing service). Culled from his online presentation and edited for clarity, the following tactical pointers can be applied to avoid obstacles.

1. Get Data in Order

When data scientists are asked to name the biggest impediment to machine learning, they say “data,” asserted Sivasubramanian. “More than 50% of data scientists spend their time in data wrangling, annotation, ETL and so forth,” he said, noting that the way to avoid this and accelerate machine learning is to ask three questions: What data is available today? How can it be made easily available so that you can get started? And what data will you wish you had a year from now, so that you can start collecting it today and build a durable advantage for years to come?

2. Understand Where to Apply Machine Learning

Picking the right business problem is important, said Sivasubramanian, who compartmentalizes them along three dimensions: data readiness, business impact and machine learning applicability. “Machine learning algorithms and research have come a long way in solving the problems,” he said. “If you pick a problem where the data is not ready and machine learning research hasn’t been developed enough to solve this problem, but it is high business impact, you can throw a lot of resources at it.

“But if you force a deadline, it’s going to lead to frustrated data scientists. On the other hand, if you pick a low-business-impact problem but high data and machine learning applicability, it could be a good prototype to build experience. Ideally, what you want is a problem that scores high on these three dimensions because it’s a great place to start.”
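One way to picture this three-dimension screen is as a simple triage function. The sketch below is an illustration only, not a tool Amazon describes. The 1-to-5 scale, the cut-off for "high," the category labels and the example project are assumptions made for the example.

```python
from dataclasses import dataclass

HIGH = 4  # assumed cut-off for "high" on a hypothetical 1-5 scale


@dataclass
class Candidate:
    name: str
    data_readiness: int
    business_impact: int
    ml_applicability: int


def triage(c: Candidate) -> str:
    """Sort a candidate problem into one of the cases described in the talk."""
    data_ok = c.data_readiness >= HIGH
    impact_ok = c.business_impact >= HIGH
    ml_ok = c.ml_applicability >= HIGH
    if data_ok and impact_ok and ml_ok:
        return "start here"
    if data_ok and ml_ok:
        # Low impact, but data and ML applicability are strong.
        return "prototype to build experience"
    if impact_ok and not data_ok and not ml_ok:
        return "resource-heavy: avoid forcing a deadline"
    return "needs discussion"


# Hypothetical candidate project.
print(triage(Candidate("contact center forecast", 5, 4, 5)))
```

Scoring the three dimensions separately, rather than collapsing them into a single number, keeps visible which one is holding a project back.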

Sivasubramanian advised against building a group of technical experts in machine learning and placing them in a separate team without any contact with domain experts. “What typically happens is that technical experts tend to build interesting proof-of-concepts with no take off from business,” said Sivasubramanian, adding that the ideal team, composed of domain experts and technical experts, will “work backwards from the customer and build something meaningful.”

3. Address the Skills Gap

There are not enough people who know machine learning, said Sivasubramanian. He pointed to World Economic Forum data, which shows that jobs such as artificial intelligence and machine learning specialists or data scientists are forecast to be among the most in-demand roles across most industries by 2022. Amazon started addressing this demand through its Machine Learning University about six years ago, when it started training engineers and product managers, said Sivasubramanian.

4. Don’t Do the Undifferentiated Heavy Lifting

The final pitfall, according to Sivasubramanian, is that organizations become excited about solving undifferentiated heavy lifting and, by extension, fall prey to the idea of building a machine learning platform, a translation engine or a contributors’ engine.

In a 2006 speech, “We Build Muck, So You Don’t Have To,” Jeff Bezos defined “undifferentiated heavy lifting” as server hosting, bandwidth management, contract negotiation, scaling and managing physical growth, as well as dealing with the accumulated complexity of heterogeneous hardware and co-ordinating large teams to manage each of these areas.

Sivasubramanian offers this advice: “What you ideally want is your engineers to focus on things that matter to the business and leverage things from clouds, such as AWS, or from open-source technologies, and solve the purely differentiated business problem.”

Avoiding these pitfalls will set enterprises up for a future of machine learning where “businesses move from being reactive to proactive, to automate their processes from manual to automated processing, and from generalized customer experience to personalized experiences, and to taking technology from being obscure to being accessible,” he said.

July 01, 2020 at 12:28AM

For Machine Builders, It's Open Season - Machine Design