If a person in the developing world severely fractures a limb, they face an impossible choice. An improperly healed fracture could mean a lifetime of pain, but lengthy healing time in traction or a bulky cast results in immediate financial hardship.

Pacific Northwest National Laboratory (PNNL) machine learning scientists leaped into action when they learned they could help a local charity whose treatments allow patients in the developing world to walk within one week of surgery—even when fractures are severe.

For more than 20 years, the Richland, Washington-based charity SIGN Fracture Care has pioneered orthopedic care, including training and innovatively designed implants that speed healing without real-time operating room X-ray machines. During those 20 years, they've built a database of 500,000 procedure images and outcomes that serves as a learning hub for doctors around the world. Now, PNNL's machine learning scientists have developed computer vision tools to identify surgical implants in the images, making it easier to sort through the database and improve surgical outcomes.

Uniting worldwide medical data

The partnership between PNNL and SIGN was born when data scientist Chitra Sivaraman struck up a conversation with a SIGN employee during a volunteer event. In their day jobs, Sivaraman and her team members use machine learning to automatically identify clouds or assess the quality of sensor data, so she immediately understood how machine learning techniques could make quick work of finding trends in the half-million images in SIGN's database.

Sivaraman recruited a multidisciplinary team and applied for funding through Quickstarter, a PNNL program where staff vote to award internal funding to worthy projects that stretch beyond some of PNNL's core capabilities.

"It was funded so fast, I wished I'd asked for more!" Sivaraman said. "I think my colleagues were excited by the opportunity for PNNL's machine learning scientists to use their image classification knowledge to solve a real-world problem for a great cause."

Computational chemist Jenna Pope joined the team, followed by Edgar Ramirez, a Washington State University intern with aspirations to attend medical school. Together, they harnessed deep learning techniques to address the database's biggest challenge: a huge variety of image types and quality.

Supervising the computer's learning

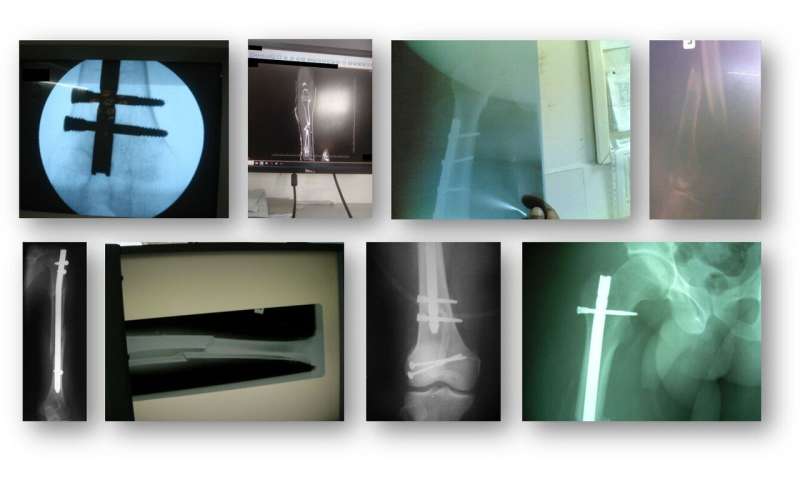

Most of the time, when scientists develop deep learning techniques, they have a perfect set of images that are the same size and orientation. SIGN's database, however, is so large and varied that many of its useful images did not conform to any standard.

First, the team had to teach the computer to distinguish between photos of people and images of X-rays. This was tricky because in addition to multiple pictures of patients, busy doctors upload photos of X-rays without a standard orientation. Additionally, sometimes the pictures of X-rays were shot in a way that included distractions, such as the clinic in the background.

Lacking good initial examples, the team had to teach the computer to focus on the implants and avoid errors such as mistaking the fingers holding the X-ray image for one of the implant's screws.

Once the team had enough usable images, SIGN helped them identify implants using annotated images. The team drew bounding boxes around the parts of the implants in 300 images, then used those annotations to train the computer model to detect different implants.

It was painstaking work, but it paid off. Because the model learned what to look for in those 300 images, it could reliably identify the nails, screws, and plates in the individual implants from a larger selection of database images.
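As a rough illustration of what a bounding-box annotation looks like and how a detection can be checked against it, here is a minimal Python sketch. It is not the team's code: the `Box` format, the pixel coordinates, and the 0.5 overlap threshold are all assumptions made for this example. The overlap measure shown, intersection over union (IoU), is a common convention in object detection, and the article does not say which measure the team used.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box in pixel coordinates (hypothetical format)."""
    label: str    # e.g. "nail", "screw", or "plate"
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        width = max(0.0, self.x_max - self.x_min)
        height = max(0.0, self.y_max - self.y_min)
        return width * height


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes; 0.0 when they do not overlap."""
    inter_w = min(a.x_max, b.x_max) - max(a.x_min, b.x_min)
    inter_h = min(a.y_max, b.y_max) - max(a.y_min, b.y_min)
    if inter_w <= 0 or inter_h <= 0:
        return 0.0
    inter = inter_w * inter_h
    union = a.area() + b.area() - inter
    return inter / union if union > 0 else 0.0


def is_match(predicted: Box, annotated: Box, threshold: float = 0.5) -> bool:
    """Count a prediction as correct if labels agree and boxes overlap enough.

    The 0.5 threshold is an assumed, conventional default.
    """
    return predicted.label == annotated.label and iou(predicted, annotated) >= threshold


# Made-up coordinates: a hand-drawn annotation and a model prediction.
annotated = Box("screw", 100, 200, 140, 260)
predicted = Box("screw", 105, 205, 145, 265)

print(f"IoU: {iou(predicted, annotated):.2f}")
print(f"Match: {is_match(predicted, annotated)}")
```

In a scheme like this, a box drawn around the fingers holding the X-ray would count as an error if the model labeled it a screw, because it would not overlap any annotated screw.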

More applications for computer vision

Next, Sivaraman and her team would like to train their tool to assess each image's quality and automatically prompt the doctor to upload a usable image when one falls short. Currently, SIGN's founder, Dr. Zirkle, manually approves hundreds of images a day. Automating database image approval would free up time for him to focus on teaching or other tasks.

The goal of orthopedic surgeons throughout the world is to enable fracture healing, and many variables come into play when evaluating not only whether a fracture has healed, but whether it will heal. Eventually, PNNL's machine learning scientists could expand the tool to measure other, non-X-ray healing indicators or refine the tool to sort pre-operation X-rays by bone type and fracture location, helping doctors more quickly identify the procedures that lead to better outcomes.

The work with SIGN's database draws on PNNL's expertise in creating machine learning algorithms that can accurately classify large data collections using very few examples. This expertise includes computer vision techniques that look for cancer in diagnostic images or detect toxic pathogens in the soil, with many other potential applications for national security. This project demonstrates PNNL's technical capabilities in classifying X-ray images to support research in national security, materials science, and biomedical sciences.

The results of the collaboration between PNNL and SIGN are available online in the open-access Journal of Medical Artificial Intelligence.


Citation: Machine learning scientists teach computers to read X-ray images (2020, September 30) retrieved 30 September 2020 from https://ift.tt/3l4Nttb
